UI/UX Design: VR Training for the Robotic Operating Room


System Requirement: The training scenario involves interacting with a virtual surgeon and a virtual non-sterile staff member to troubleshoot an instrument arm by attempting to restart the system, using a special tool to open the instrument’s jaws, removing the instrument, stowing the arm, and disabling it.

Discovery & Research

- Stakeholder Interviews: Gathered detailed requirements from subject matter experts (surgeons, trainers, engineers).
- User Research: Identified target users as surgical trainees and OR staff with varying levels of VR experience.
- Competitive Analysis: Analyzed similar VR medical training tools to identify usability strengths and gaps.

da Vinci Robotic OR

The da Vinci system is an advanced robotic platform designed to extend the capabilities of surgeons performing minimally invasive surgery. It includes a set of robotic arms, a high-definition 3D camera, and a surgeon's console that allows precise control of the surgical instruments. Compared with traditional laparoscopic surgery, it offers greater dexterity, precision, and control, allowing surgeons to perform complex procedures through small incisions. The system is widely used across specialties including urology, gynecology, cardiothoracic, and general surgery, where its benefits for patients include shorter hospital stays, less pain, and faster recovery.

Scenario Mapping

- Task Flow Diagram: Broke down the interaction into sequential tasks with system and user actions:
  - Engage with virtual surgeon and non-sterile staff
  - Navigate to instrument arm
  - Attempt system restart
  - Open jaws using tool
  - Remove instrument
  - Stow the arm
  - Disable the arm
- Interaction Mapping: Defined which actions would use:
  - Gaze and gesture tracking
  - Haptic controllers (for tools and jaw manipulation)
  - Voice commands (for communication with staff NPCs)
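The task flow above is strictly sequential, so one way to model it is as an ordered list of tasks plus a tracker that advances only when the expected task is performed. The sketch below is a hypothetical Python illustration, not the project's Unity code: the task identifiers (such as `open_jaws`) and the `TaskFlowTracker` class are invented names, and out-of-order attempts are simply logged as feedback strings.

```python
from dataclasses import dataclass, field

# The seven tasks from the task flow diagram, in the order the scenario expects.
# Identifiers are hypothetical labels chosen for this sketch.
TASK_FLOW = (
    "engage_staff",       # Engage with virtual surgeon and non-sterile staff
    "navigate_to_arm",    # Navigate to instrument arm
    "attempt_restart",    # Attempt system restart
    "open_jaws",          # Open jaws using tool
    "remove_instrument",  # Remove instrument
    "stow_arm",           # Stow the arm
    "disable_arm",        # Disable the arm
)


@dataclass
class TaskFlowTracker:
    """Hypothetical tracker that advances through TASK_FLOW one task at a time."""
    tasks: tuple = TASK_FLOW
    index: int = 0
    log: list = field(default_factory=list)

    @property
    def current(self):
        """The task the user is expected to perform next, or None when finished."""
        return self.tasks[self.index] if self.index < len(self.tasks) else None

    @property
    def finished(self) -> bool:
        return self.index >= len(self.tasks)

    def perform(self, task: str) -> bool:
        """Advance if `task` is the expected one; otherwise record corrective feedback."""
        if task == self.current:
            self.index += 1
            self.log.append(f"ok: {task}")
            return True
        self.log.append(f"out of order: {task} (expected {self.current})")
        return False
```

Calling `perform("remove_instrument")` before `open_jaws` returns `False` and leaves the tracker waiting on `open_jaws`, which mirrors the incorrect-sequence feedback listed under Error Prevention & Recovery.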

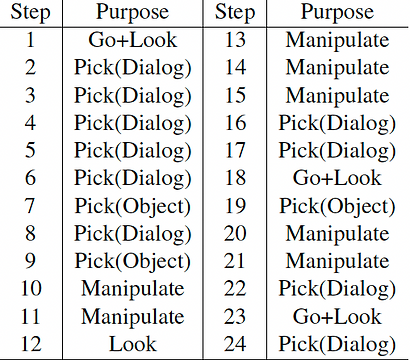

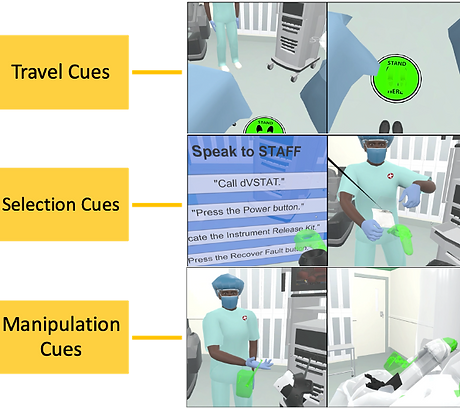

To accomplish the training scenario provided by Intuitive Surgical, the VR training application described in this article breaks the procedure into 24 steps, each requiring a specific user action. Based on pilot tests, we decided to use dialog buttons for conversing with the virtual agents. For each step, an interaction cue guides the user through the troubleshooting scenario. Each cue pairs a subtle verbal instruction from one of the virtual agents (feedforward) with an integrated visual animation that serves as a perceived affordance. All cues are scenario-triggered: each one fires when the scenario reaches its step.
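Since every step has its own cue and the cues are scenario-triggered, a cue can be stored as data keyed by step number and fired when the scenario enters that step. This is a minimal sketch under that assumption: `InteractionCue`, `CueDirector`, the step number, the example line, and the animation id are all hypothetical, and voice and animation playback are passed in as plain callbacks in place of real engine calls.

```python
from dataclasses import dataclass
from typing import Callable, Dict


@dataclass(frozen=True)
class InteractionCue:
    speaker: str     # virtual agent that delivers the verbal instruction
    line: str        # subtle verbal instruction (feedforward)
    animation: str   # id of the visual animation acting as a perceived affordance


class CueDirector:
    """Holds one cue per scenario step and fires it when the scenario enters that step."""

    def __init__(self, play_voice: Callable[[str, str], None],
                 play_animation: Callable[[str], None]) -> None:
        self._cues: Dict[int, InteractionCue] = {}
        self._play_voice = play_voice          # stand-in for an engine audio/NPC call
        self._play_animation = play_animation  # stand-in for an engine animation call

    def register(self, step: int, cue: InteractionCue) -> None:
        self._cues[step] = cue

    def on_step_entered(self, step: int) -> None:
        """Called by the scenario logic (not by the user) when a new step begins."""
        cue = self._cues.get(step)
        if cue is None:
            return
        self._play_voice(cue.speaker, cue.line)
        self._play_animation(cue.animation)


# Hypothetical usage: record what would be played instead of calling an engine.
events = []
director = CueDirector(
    play_voice=lambda speaker, line: events.append(("voice", speaker, line)),
    play_animation=lambda anim: events.append(("animation", anim)),
)
director.register(10, InteractionCue("staff", "Use the tool to open the jaws.", "glow_jaw_tool"))
director.on_step_entered(10)
```

Keeping cues as data rather than hard-coding them into each step makes it easy to revise wording or animations after pilot tests without touching the scenario logic.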

Prototyping

- Low-Fidelity Wireframes (3D Sketches): Rapidly mocked up key scenes in Unity using grayboxing:
  - System terminal interface
  - Instrument arm interaction zones
  - NPC proximity indicators
- NPC Dialogue Trees: Designed basic branching dialogues for surgeon and staff, contextual to task progress.
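A branching dialogue that is contextual to task progress can be sketched as nodes whose options are filtered by how many tasks the trainee has completed, with each option corresponding to one dialog button. The classes, node ids, lines, and the `min_progress` threshold below are illustrative assumptions, not the project's actual dialogue data.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class DialogueOption:
    text: str                  # label on the dialog button
    next_node: Optional[str]   # node this choice leads to; None ends the conversation
    min_progress: int = 0      # hypothetical gate: tasks that must be done before it appears


@dataclass
class DialogueNode:
    speaker: str               # e.g. "surgeon" or "staff"
    line: str                  # what the NPC says at this node
    options: List[DialogueOption] = field(default_factory=list)


class DialogueTree:
    def __init__(self, nodes: Dict[str, DialogueNode], start: str) -> None:
        self.nodes = nodes
        self.current: Optional[str] = start

    def available_options(self, progress: int) -> List[DialogueOption]:
        """Options of the current node whose progress gate has been met."""
        if self.current is None:
            return []
        return [o for o in self.nodes[self.current].options if progress >= o.min_progress]

    def choose(self, index: int, progress: int) -> Optional[DialogueNode]:
        """Pick one of the currently available options and move to its target node."""
        option = self.available_options(progress)[index]
        self.current = option.next_node
        return None if self.current is None else self.nodes[self.current]


# Illustrative content only; min_progress=4 means the first four task-flow steps are done.
NODES = {
    "start": DialogueNode("surgeon", "The instrument arm isn't responding.", [
        DialogueOption("I'll try restarting the system.", "restart"),
        DialogueOption("The jaws are open. Removing the instrument now.", "remove", min_progress=4),
    ]),
    "restart": DialogueNode("staff", "Restarting now."),
    "remove": DialogueNode("surgeon", "Good. Stow the arm when you're done."),
}
```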

Define and Ideate the Prototype

Different Tasks

The application’s training scenario involves interacting with a virtual operating room (OR) team to troubleshoot the surgical robot after an instrument failure. To complete the scenario, the user must perform a variety of steps: moving about the OR (travel via real walking), selecting dialog options and picking up tools (selection via virtual hand), and interacting with instruments and the surgical robot (manipulation via virtual hand). The scenario comprises 24 steps in total: 4 travel tasks, 13 selection tasks, and 7 manipulation tasks.

UI/UX Design

- Spatial UI: Created HUD elements and diegetic interfaces (e.g., tool overlays, arm status indicators).
- Accessibility: Included visual cues (high contrast, glow highlights), audio prompts, and motion comfort settings.
- Error Prevention & Recovery:
  - Confirmations for critical actions (e.g., “Disable Arm?”)
  - Clear feedback on incorrect sequences (e.g., attempting removal before the jaws are opened)
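Confirmations for critical actions amount to a two-step guard: the first press only raises a prompt, and the action runs after an explicit confirm. The following is a hypothetical sketch of that pattern; `ConfirmationGate`, its states, and the callback are invented for illustration, with the “Disable Arm?” prompt taken from the example in the list.

```python
from enum import Enum, auto
from typing import Callable


class GateState(Enum):
    IDLE = auto()
    AWAITING_CONFIRMATION = auto()


class ConfirmationGate:
    """Two-step guard: a critical action runs only after an explicit confirm."""

    def __init__(self, prompt: str, action: Callable[[], None]) -> None:
        self.prompt = prompt
        self._action = action
        self.state = GateState.IDLE

    def request(self) -> str:
        """First press: show the confirmation prompt instead of acting."""
        self.state = GateState.AWAITING_CONFIRMATION
        return self.prompt

    def confirm(self) -> bool:
        """Run the action only if a request is pending; otherwise do nothing."""
        if self.state is not GateState.AWAITING_CONFIRMATION:
            return False
        self.state = GateState.IDLE
        self._action()
        return True

    def cancel(self) -> None:
        """Dismiss the prompt; the action is not run."""
        self.state = GateState.IDLE
```

A stray confirm with no pending request is ignored, so an accidental controller press cannot disable the arm on its own.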

Usability Testing

- Test Sessions: Ran studies with internal testers and clinical trainees.
- Findings:
  - Users preferred floating menus and direct interactions.
  - Gestures needed clearer onboarding.
  - Visual feedback helped reduce task time by 30%.

User Research Conducted to Evaluate the Prototype

For each metric, I conducted a three-way mixed repeated measures analysis of variance (RM-ANOVA) at a 95% confidence level, with session, purpose, and timing as factors (session and purpose within-subjects, timing between-subjects). The Shapiro-Wilk test of normality was used to check that results were approximately normally distributed. When Mauchly’s test indicated that the assumption of sphericity had been violated, degrees of freedom were corrected using Greenhouse-Geisser estimates of sphericity. When a significant main effect was found, Bonferroni-corrected post hoc tests were used to identify significantly different conditions while controlling for Type I errors across the repeated comparisons.
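The article does not say which statistics package ran these tests, and the sketch below does not reproduce the full mixed RM-ANOVA or Mauchly's test. It only illustrates, in plain Python, two of the corrections mentioned: Bonferroni adjustment of post hoc p-values (multiply each by the number of comparisons, capped at 1) and the Greenhouse-Geisser epsilon, computed from the covariance matrix of the within-subject conditions and used to scale both degrees of freedom of the F test. All function names are hypothetical.

```python
from typing import List, Sequence, Tuple


def bonferroni(p_values: Sequence[float]) -> List[float]:
    """Bonferroni-adjusted p-values: multiply by the number of comparisons, cap at 1."""
    m = len(p_values)
    return [min(1.0, p * m) for p in p_values]


def covariance(data: Sequence[Sequence[float]]) -> List[List[float]]:
    """Sample covariance (n - 1 denominator) of the columns; rows are participants."""
    n, k = len(data), len(data[0])
    means = [sum(row[j] for row in data) / n for j in range(k)]
    return [[sum((row[i] - means[i]) * (row[j] - means[j]) for row in data) / (n - 1)
             for j in range(k)] for i in range(k)]


def greenhouse_geisser_epsilon(cov: Sequence[Sequence[float]]) -> float:
    """GG epsilon from a k x k covariance matrix of the within-subject conditions.

    Uses the double-centered matrix S*: epsilon = trace(S*)^2 / ((k - 1) * sum of S*_ij^2).
    epsilon is 1 under sphericity and can fall to 1 / (k - 1) in the worst case.
    """
    k = len(cov)
    row_means = [sum(r) / k for r in cov]
    col_means = [sum(cov[i][j] for i in range(k)) / k for j in range(k)]
    grand = sum(row_means) / k
    centered = [[cov[i][j] - row_means[i] - col_means[j] + grand for j in range(k)]
                for i in range(k)]
    trace = sum(centered[i][i] for i in range(k))
    sum_sq = sum(x * x for row in centered for x in row)
    if sum_sq == 0:
        raise ValueError("differences between conditions have zero variance")
    return trace ** 2 / ((k - 1) * sum_sq)


def corrected_df(df_effect: float, df_error: float, eps: float) -> Tuple[float, float]:
    """Greenhouse-Geisser correction: scale both F-test degrees of freedom by epsilon."""
    return df_effect * eps, df_error * eps
```

In practice epsilon would be computed from the participants' scores, e.g. `greenhouse_geisser_epsilon(covariance(scores))`, and applied only when Mauchly's test flags a sphericity violation, as described in the paragraph.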

Outcome:

- Delivered a VR training prototype with intuitive interactions, realistic feedback, and modular NPC support.
- Improved task completion accuracy and reduced training time through thoughtful UX design.